The project has been busy over the last few months! As announced in our last news update, we and our partners have been able to get down to work, and our Software Engineering Expert has now been recruited: welcome to Lucas Detto!

Axis 1: Fine assessment of patient mobility in VR (LCOMS, DEVAH)

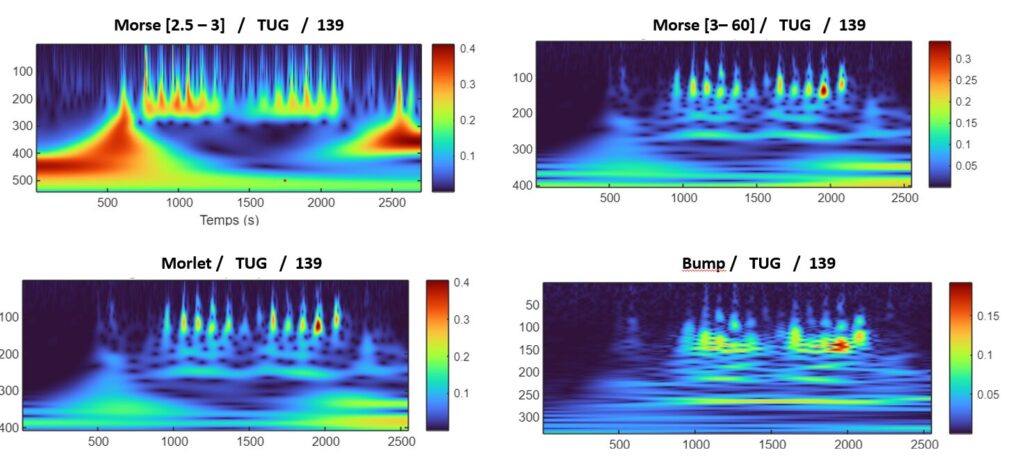

In parallel with the MAIF project, we have obtained funding for a PhD thesis on the “fine assessment of mobility in elderly patients”. Kaoutar El Ghabi has been recruited for this thesis, and her work will involve processing all the data from the trials using deep learning methods. In the first phase, she carried out an extensive literature review on the assessment of mobility in the elderly. The resulting review article, entitled “Mobility Assessment for Healthy Older Adults: A Systematic Review”, is currently under review.

She is now working on preprocessing the raw data from the inertial measurement units (IMUs) to shape it into the input format expected by the deep learning models used.
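To illustrate what shaping IMU data for a deep learning model can look like, here is a minimal sketch in Python. It is not the pipeline used in the thesis: the function names, the six-channel sample layout, and the window and step sizes are all assumptions made for the example. It cuts a recording into fixed-length overlapping windows, then scales each channel within a window.

```python
from typing import List, Sequence

# Assumption: one IMU reading is a tuple of floats, e.g. (ax, ay, az, gx, gy, gz).
Sample = Sequence[float]


def sliding_windows(samples: Sequence[Sample], window: int, step: int) -> List[List[Sample]]:
    """Cut a recording into fixed-length, possibly overlapping windows.

    Trailing samples that do not fill a whole window are dropped.
    """
    if window <= 0 or step <= 0:
        raise ValueError("window and step must be positive")
    return [
        list(samples[start:start + window])
        for start in range(0, len(samples) - window + 1, step)
    ]


def normalize_channels(window: Sequence[Sample]) -> List[List[float]]:
    """Zero-mean, unit-variance scaling of each channel within one window."""
    n_channels = len(window[0])
    columns = [[sample[c] for sample in window] for c in range(n_channels)]
    scaled_columns = []
    for column in columns:
        mean = sum(column) / len(column)
        variance = sum((v - mean) ** 2 for v in column) / len(column)
        std = variance ** 0.5 or 1.0  # avoid dividing by zero on a flat channel
        scaled_columns.append([(v - mean) / std for v in column])
    # Transpose back to (time, channel) order.
    return [list(row) for row in zip(*scaled_columns)]
```

Each normalized window has shape (time, channel), which is the layout most sequence models accept once windows are stacked into a batch. The window length and overlap would have to be chosen according to the sampling rate and the movements being studied.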

As the digital tools are not yet operational, she needs data to start studying the different machine learning/deep learning methods that can be used in her research. To this end, we have collaborated with the University of Kiel to gain access to its database of elderly people’s movements (including various walking tests and daily activities).

Since last time, we’ve renewed our hardware, buying latest-generation equipment that is lighter and more efficient. To keep the ecosystem consistent, we’ve stayed with the same manufacturer, Vive, and swapped our Vive Pro for a Vive XR Elite. It’s much lighter than the old headset, offers better image quality, supports augmented reality and, above all, provides eye tracking, which we hope will yield very useful data for our experiments. Alongside this, we’ve also replaced the body trackers with lighter, new-generation optical trackers. All of this works without external beacons or handheld controllers, and we hope this less restrictive equipment will be more pleasant for patients.

In order to assess the potential differences in behavior between augmented reality and virtual reality, we have built a 3D model of our experiment room at the LCOMS. Here’s a sneak preview video of the prototype with the new hardware, during an obstacle-crossing exercise:

We’re now working on defining the exact experimental protocol so that we can start trials and begin gathering real data.

Axis 2: Remobilizing the patient via VR/XR exergame (Mist Studio, Dinertia, LCOMS, Staps)

Our partner Mist Studio, in partnership with the 2LPN laboratory, has carried out a study to define the graphic universe best suited to our target population. This user-centered study, based on the “focus group” technique, enabled us to guide the artistic choices for the universe of the serious remobilization game more precisely. The study is currently in the process of being published.

With a view to offering an application that lets patients remobilize independently at home, we are developing a human pose recognition prototype to give patients feedback on their exercises. We are working along two lines:

A collaboration with Dinertia, a company specializing in remote physiotherapy consultations, to obtain recordings of exercise movements performed by professionals, as well as an application for viewing and manipulating these recordings.

As mentioned in our previous news update, a student from the Télécom Nancy engineering school was given a project on light remobilization using a webcam and AI-based posture recognition. The result of this project is an application that detects posture using AI on two cameras arranged in a stereoscopic setup, then combines the two 2D poses to reconstruct a complete 3D posture.
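As an illustration of how two 2D poses can be combined into a 3D one, here is a minimal Python sketch of triangulation for the simplest stereo case. It is not the student’s implementation: it assumes two identical, rectified cameras (parallel optical axes, the right camera shifted horizontally by a known baseline), and every name and parameter below is hypothetical.

```python
from typing import Dict, Tuple

Point2D = Tuple[float, float]          # pixel coordinates (u, v)
Point3D = Tuple[float, float, float]   # metres, in the left-camera frame


def triangulate_rectified(left: Point2D, right: Point2D, focal_px: float,
                          baseline_m: float, cx: float, cy: float) -> Point3D:
    """Recover a 3D point from its pixel positions in a rectified stereo pair.

    Assumes the same focal length (in pixels) and principal point (cx, cy)
    for both cameras, which is a simplification.
    """
    disparity = left[0] - right[0]
    if disparity <= 0:
        raise ValueError("non-positive disparity: point cannot be triangulated")
    z = focal_px * baseline_m / disparity      # depth from disparity
    x = (left[0] - cx) * z / focal_px          # back-project through the left camera
    y = (left[1] - cy) * z / focal_px
    return (x, y, z)


def lift_pose(left_pose: Dict[str, Point2D], right_pose: Dict[str, Point2D],
              **camera: float) -> Dict[str, Point3D]:
    """Triangulate every joint that was detected in both views."""
    return {
        joint: triangulate_rectified(left_pose[joint], right_pose[joint], **camera)
        for joint in left_pose.keys() & right_pose.keys()
    }
```

With arbitrary camera placement, a calibrated general triangulation would be needed instead. The rectified case is shown here only because it makes the principle visible in a few lines.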

The current objective is to obtain the patient’s posture through AI and compare it with the posture from the Dinertia exercises, in order to give the patient feedback such as “raise the arm” or “straighten the leg”. We are currently trying to improve the accuracy of posture recognition so that the comparison with the reference postures is more reliable.
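One possible way to compare a detected posture with a reference is to compare joint angles, which do not depend on the patient’s size or position in the room. The Python sketch below shows the idea under stated assumptions: the joint names, the `JOINTS` table, the 15-degree tolerance and the wording of the messages are all hypothetical, and this is not the project’s actual comparison method.

```python
import math
from typing import Dict, List, Tuple

Point3D = Tuple[float, float, float]

# Hypothetical joint definitions: name -> (one end, vertex, other end).
JOINTS = {
    "elbow": ("shoulder", "elbow", "wrist"),
    "knee": ("hip", "knee", "ankle"),
}


def joint_angle(a: Point3D, b: Point3D, c: Point3D) -> float:
    """Angle in degrees at joint b, between segments b->a and b->c."""
    u = [a[i] - b[i] for i in range(3)]
    v = [c[i] - b[i] for i in range(3)]
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("degenerate joint: two keypoints coincide")
    cosine = sum(ui * vi for ui, vi in zip(u, v)) / norm
    # Clamp to [-1, 1] to absorb floating-point rounding before acos.
    return math.degrees(math.acos(max(-1.0, min(1.0, cosine))))


def posture_feedback(patient: Dict[str, Point3D], reference: Dict[str, Point3D],
                     tolerance_deg: float = 15.0) -> List[str]:
    """Return one instruction per joint whose angle is too far from the reference."""
    messages = []
    for name, (a, b, c) in JOINTS.items():
        measured = joint_angle(patient[a], patient[b], patient[c])
        target = joint_angle(reference[a], reference[b], reference[c])
        diff = measured - target
        if abs(diff) > tolerance_deg:
            # A smaller angle than the reference means the joint is too bent.
            action = "straighten" if diff < 0 else "bend"
            messages.append(f"{action} the {name} by about {abs(diff):.0f} degrees")
    return messages
```

The tolerance has to absorb pose-estimation error as well as normal variation between people, which is one reason why improving recognition accuracy directly improves the quality of the feedback.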

Axis 3: Autonomous patient mobility monitoring (Cybernano)

Our partner Cybernano is continuing its work on autonomous mobility monitoring. The choice of sensors has been finalized, and the data transfer architecture and hardware access libraries have been defined and implemented in a proof of concept (POC). The next step is to make the various data transmission building blocks reliable through real-life testing.